Log Analysis Buddy - Massive Update

I’ve made some significant changes to the Log Analysis Buddy, a script I created a couple of years ago that uses LLMs to assist with security log analysis.

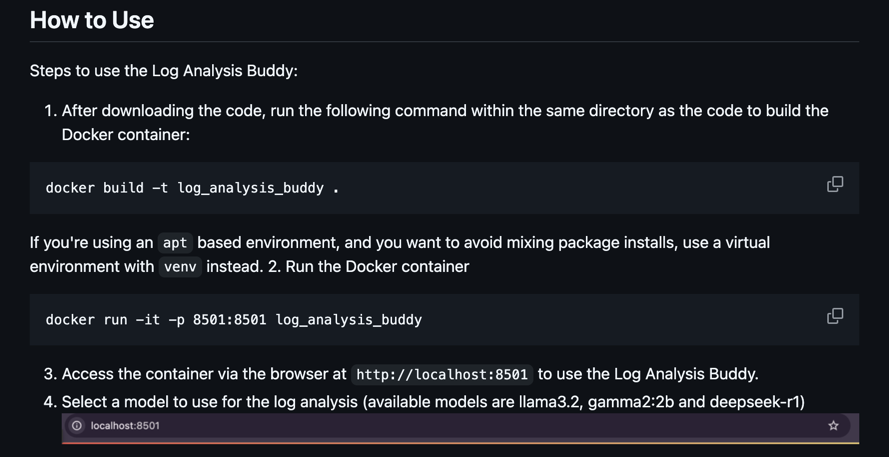

First, I changed it from a local script to a Docker container. This means you need Docker installed locally, but it allows for more flexibility in using the tool. You can build and run the Docker container from within the repository you download (the instructions for this are in the repository’s README).

Log Analysis Buddy - How To instructions

From there, you’ll access a web UI on localhost at port 8501. The frontend is a Streamlit application written in Python.

You can currently select from three models (llama3.2, gemma2:2b and deepseek-r1). The backend uses Ollama’s API to download and run models locally, rather than making calls to a hosted AI service as it did previously.
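To give a rough idea of what talking to a local Ollama instance looks like, here is a minimal sketch using only the Python standard library. It is not the tool's actual backend code. The base URL assumes Ollama's default port (11434) on localhost, which may differ inside a Docker setup, and the function names `build_generate_request` and `generate` are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is reachable on its default port on this machine.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,    # e.g. "llama3.2", "gemma2:2b" or "deepseek-r1"
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read())
    # With streaming disabled, the generated text is in the "response" field.
    return body["response"]
```

With a model already pulled, something like `generate("gemma2:2b", "Summarize these logs: ...")` would return the model's answer as a string. Nothing leaves your machine, which is the main point of moving away from a hosted service.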

Log Analysis Buddy - Model Selection

After the model is loaded, you’ll be able to begin analyzing logs! I believe CSV format is best for now, but feel free to experiment.
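Part of why CSV works well is that each row maps cleanly onto named fields, which are easy to flatten into text for a prompt. The sketch below shows one way that could be done. It is an illustration, not how the tool does it internally: the function name `csv_to_prompt`, the instruction wording, and the 50-row cap are all assumptions.

```python
import csv
import io


def csv_to_prompt(csv_text: str, max_rows: int = 50) -> str:
    """Turn CSV log text into a plain-text prompt, one line per log entry."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for index, row in enumerate(reader):
        if index >= max_rows:
            break  # cap the prompt size so it fits in the model's context
        # Render each row as "column=value" pairs using the CSV header names.
        lines.append(", ".join(f"{key}={value}" for key, value in row.items()))
    instruction = "Review the following log entries and flag anything suspicious:\n"
    return instruction + "\n".join(lines)
```

The row cap is there because small local models have limited context windows, so very large log files would likely need to be chunked or sampled first.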

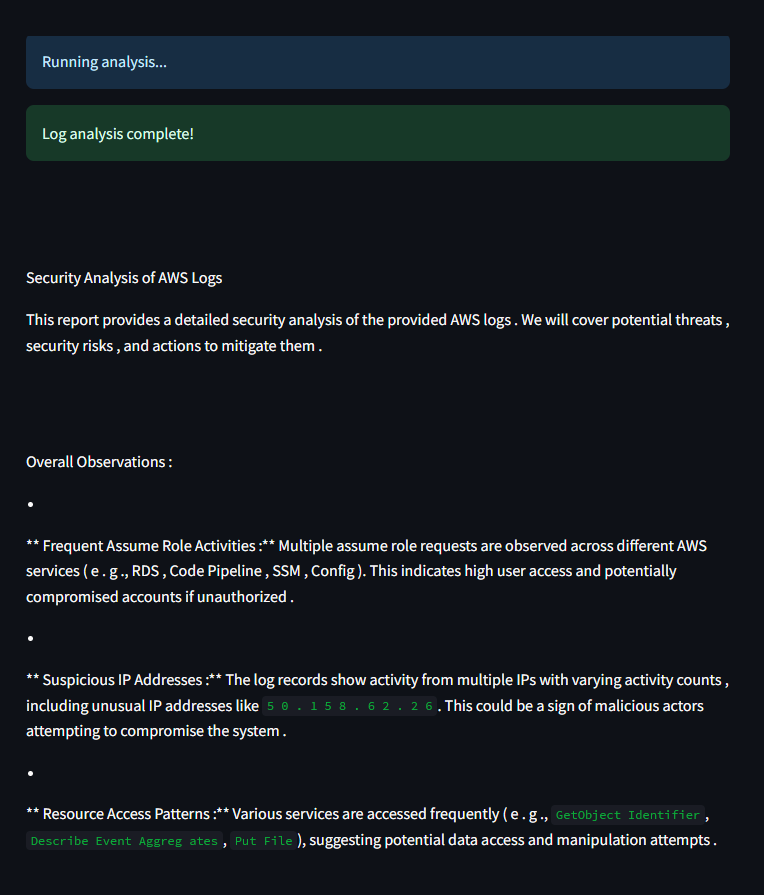

Using the gemma2 model, below is an example of what the analysis looks like after running the tool:

Log Analysis Buddy - Analysis Results

This tool is still a proof of concept, and I’ll be adding more features (a LOT more) in the coming weeks.

Feel free to add any input!