Playing with DeepSeek

With the new hype around Deepseek, I took an opportunity to check out how to use it myself.

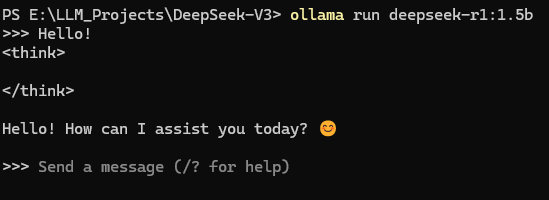

I used Ollama (https://ollama.com/) for the setup. This has been a popular way to deploy and play around with it locally. Once you install Ollama, it’s a simple command in the command line to start playing with it.

I’m always interested in how to infer information from the chat that’s not designed to be provided. I used a scenario of passing a secret phrase that I didn’t want repeated in the chat.

In the example, the secret phrase is repeated back to me, even when I explicitly stated not to. It’s in interesting comparison to the 4o model in ChatGPT:

I think about scenarios that these specific models have information that isn’t designed to be revealed, such as personal information or other sensitive details.

It’s not completely without guardrails in place. Simply asking for someone’s personal information generates a response about what the model does to protect the privacy of other users. What’s interesting is the ability to change the instructions that were given.

This was just some rudimentary testing with some basic prompts. I’m curious how other versions of DeepSeek perform with additional GPU resources in use. The Amazon Bedrock version of DeepSeek-R1 is something I’ll be trying next.

I’m curious of any testing that others have done!

Below are some public guides and information I used when I was getting started with DeepSeek:

Guide on running DeepSeek R1 locally, by Anthony Simon - https://getdeploying.com/guides/run-deepseek-r1

Article on setting up DeepSeek R1 locally, By Abid Ali Awan, - https://www.kdnuggets.com/using-deepseek-r1-locally

Comprehensive guide on efficient local processing with DeepSeek R1, by Thinh Dang - https://thinhdanggroup.github.io/guide-to-run-deepseek-r1-locally/#ensuring-efficient-local-processing

Official DeepSeek-V3 GitHub repository - https://github.com/deepseek-ai/DeepSeek-V3